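To republish this article, please follow the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License and credit the author with a link to this article.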

Author: 陈进涛

Nickname: 江上轻烟

Link: https://zhizhi123.com/2021/01/11/Service-Registry-Discovery/

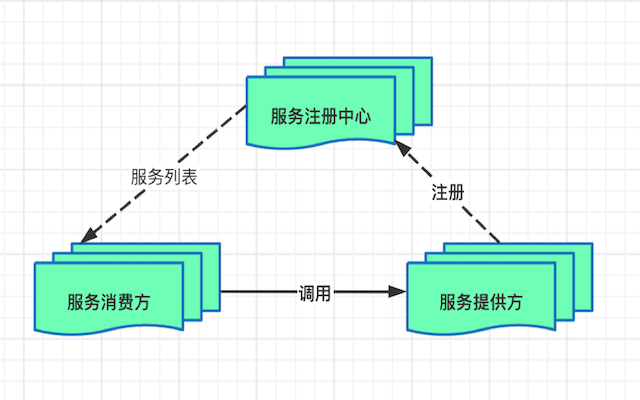

What Is a Service Registry

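A service registry is essentially an "address book": it records the mapping from services to their addresses. A service registers itself there when it starts, and when it needs to call another service, it looks up that service's address in the registry and then makes the call.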
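To make the "address book" idea concrete, here is a minimal in-memory sketch. AddressBook, Register, and Lookup are hypothetical names, not discovery's API; a real registry also has to deal with heartbeats, expiry, and replication between nodes.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// AddressBook is a hypothetical, minimal registry: it maps a service name
// (AppID) to the set of addresses registered under it.
type AddressBook struct {
	mu    sync.RWMutex
	books map[string]map[string]struct{} // appID -> set of addresses
}

// NewAddressBook returns an empty address book.
func NewAddressBook() *AddressBook {
	return &AddressBook{books: make(map[string]map[string]struct{})}
}

// Register records addr under appID; a service calls this when it starts.
// Registering the same address twice is harmless.
func (b *AddressBook) Register(appID, addr string) {
	b.mu.Lock()
	defer b.mu.Unlock()
	if b.books[appID] == nil {
		b.books[appID] = make(map[string]struct{})
	}
	b.books[appID][addr] = struct{}{}
}

// Lookup returns the addresses registered under appID, sorted for a stable order.
func (b *AddressBook) Lookup(appID string) []string {
	b.mu.RLock()
	defer b.mu.RUnlock()
	addrs := make([]string, 0, len(b.books[appID]))
	for a := range b.books[appID] {
		addrs = append(addrs, a)
	}
	sort.Strings(addrs)
	return addrs
}

func main() {
	book := NewAddressBook()
	book.Register("demo.service", "10.0.0.2:9000")
	book.Register("demo.service", "10.0.0.1:9000")
	fmt.Println(book.Lookup("demo.service")) // [10.0.0.1:9000 10.0.0.2:9000]
}
```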

Common Service Registries Compared

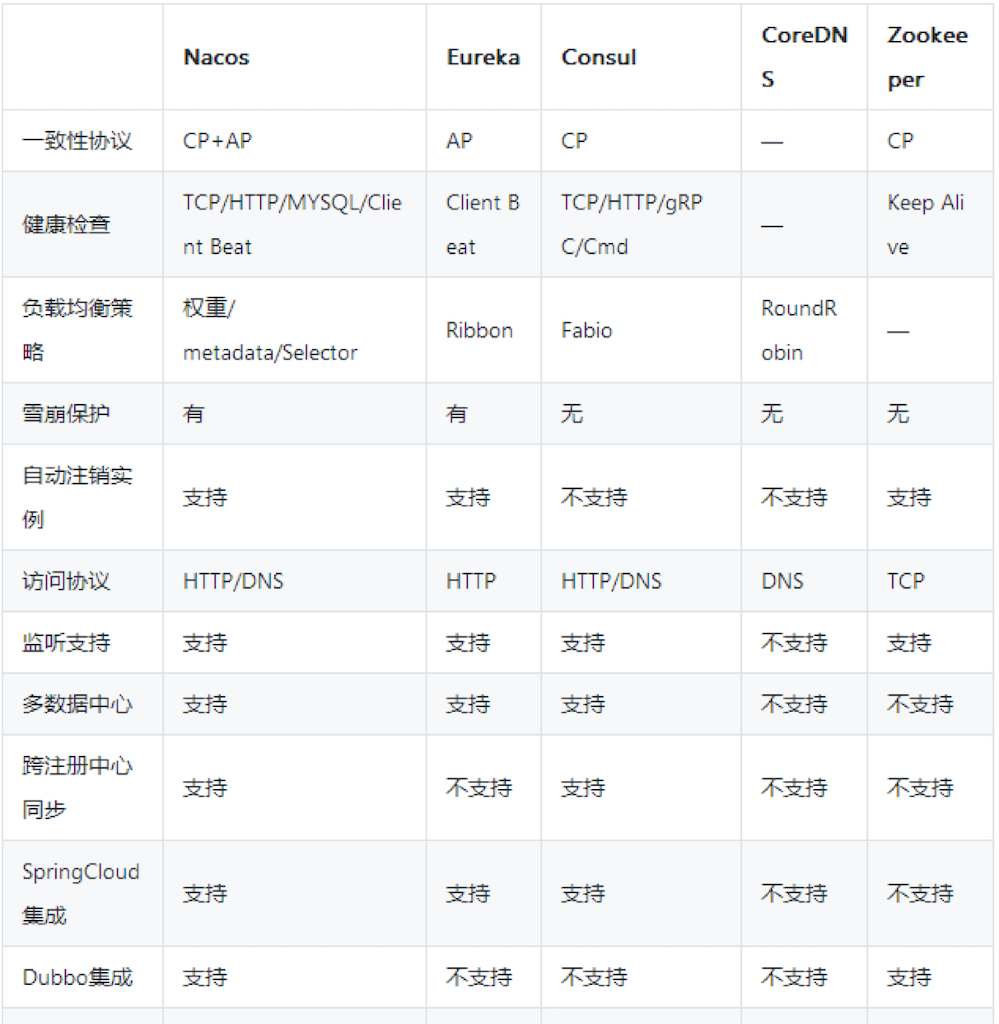

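There are many mature registry implementations in the industry; the figure compares several common ones:

ZooKeeper is a classic service registry (even though that was not what it was originally built for). For a long time it was the only option developers in China thought of when RPC service registries came up, largely because of how widespread Dubbo is in China.

Consul appeared in 2014, and Eureka was open-sourced by Netflix in 2012. Consul is designed to bundle many of the features needed for distributed service governance, supporting service registration, health checks, configuration management, Service Mesh, and more. Eureka, riding the popularity of microservices and its deep integration with the Spring Cloud ecosystem, also won a large user base.

Nacos, open-sourced by Alibaba in 2018, brings Alibaba's experience running services at large scale and aims to give users a new option for service registration and configuration management.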

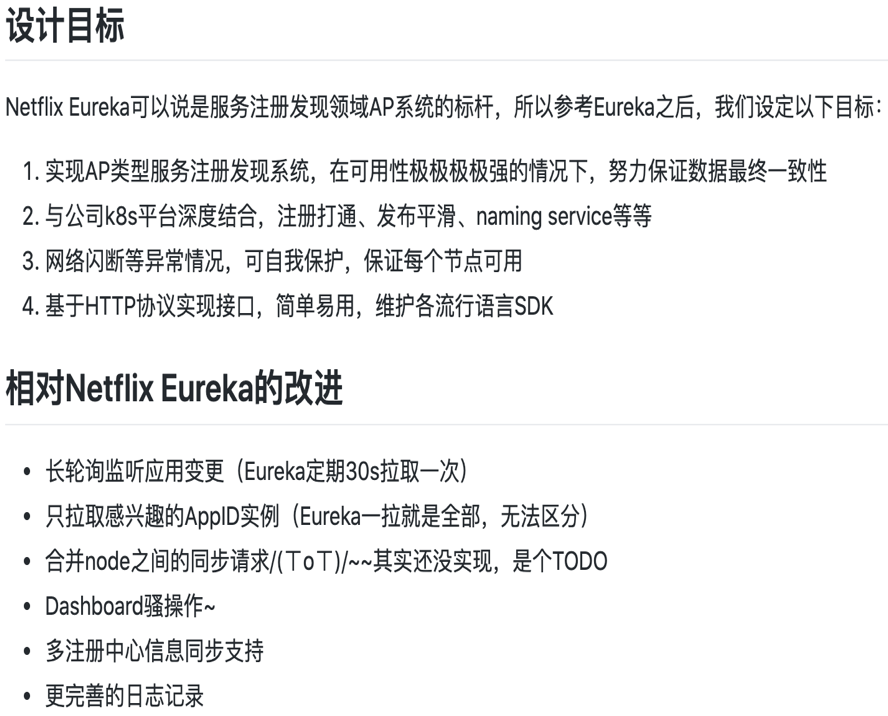

The discovery project covered in this article is a Go implementation of Eureka, developed and open-sourced by bilibili.

Design and Implementation of Discovery

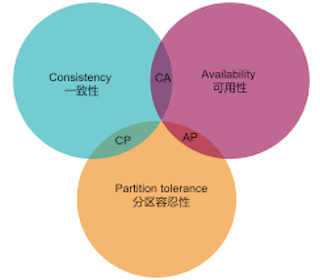

CP vs. AP

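In most distributed systems, and especially where data storage is involved, consistency should be guaranteed first; that is why ZooKeeper is designed as a CP system. Service discovery is different: if different registry nodes hold slightly different provider lists for the same service, the consequences are not catastrophic. For a service consumer, what matters most is being able to make calls at all. Trying a possibly outdated instance is better than not calling because no instance information can be obtained, since a bad instance fails fast and the call can be retried quickly. So for service discovery, availability matters more than consistency: AP beats CP.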
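A minimal sketch of what "AP" means on the consumer side, assuming a hypothetical fetch function that stands in for the registry call: the resolver remembers the last good instance list and keeps serving it when the registry cannot be reached. This only illustrates the trade-off; it is not how discovery's client SDK is written.

```go
package main

import (
	"errors"
	"fmt"
)

// errUnreachable is what the hypothetical registry call returns when it is down.
var errUnreachable = errors.New("registry unreachable")

// fetchFunc stands in for a call to the registry (hypothetical).
type fetchFunc func(appID string) ([]string, error)

// Resolver remembers the last instance list it fetched for each AppID and
// falls back to it when the registry cannot be reached.
type Resolver struct {
	fetch fetchFunc
	cache map[string][]string
}

func NewResolver(f fetchFunc) *Resolver {
	return &Resolver{fetch: f, cache: make(map[string][]string)}
}

// Resolve prefers fresh data, then possibly stale cached data, and only
// fails when the registry is down and nothing was ever cached.
func (r *Resolver) Resolve(appID string) ([]string, error) {
	addrs, err := r.fetch(appID)
	if err == nil {
		r.cache[appID] = addrs
		return addrs, nil
	}
	if cached, ok := r.cache[appID]; ok {
		// Possibly stale: if an instance is gone, the call fails fast and the
		// caller can retry another one.
		return cached, nil
	}
	return nil, err
}

func main() {
	up := true
	r := NewResolver(func(appID string) ([]string, error) {
		if !up {
			return nil, errUnreachable
		}
		return []string{"10.0.0.1:9000"}, nil
	})
	fmt.Println(r.Resolve("demo.service")) // [10.0.0.1:9000] <nil>
	up = false
	fmt.Println(r.Resolve("demo.service")) // [10.0.0.1:9000] <nil>
}
```

A CP-style client would instead return the error in the second call; the AP choice trades possible staleness for the ability to keep calling.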

Design Goals and Improvements

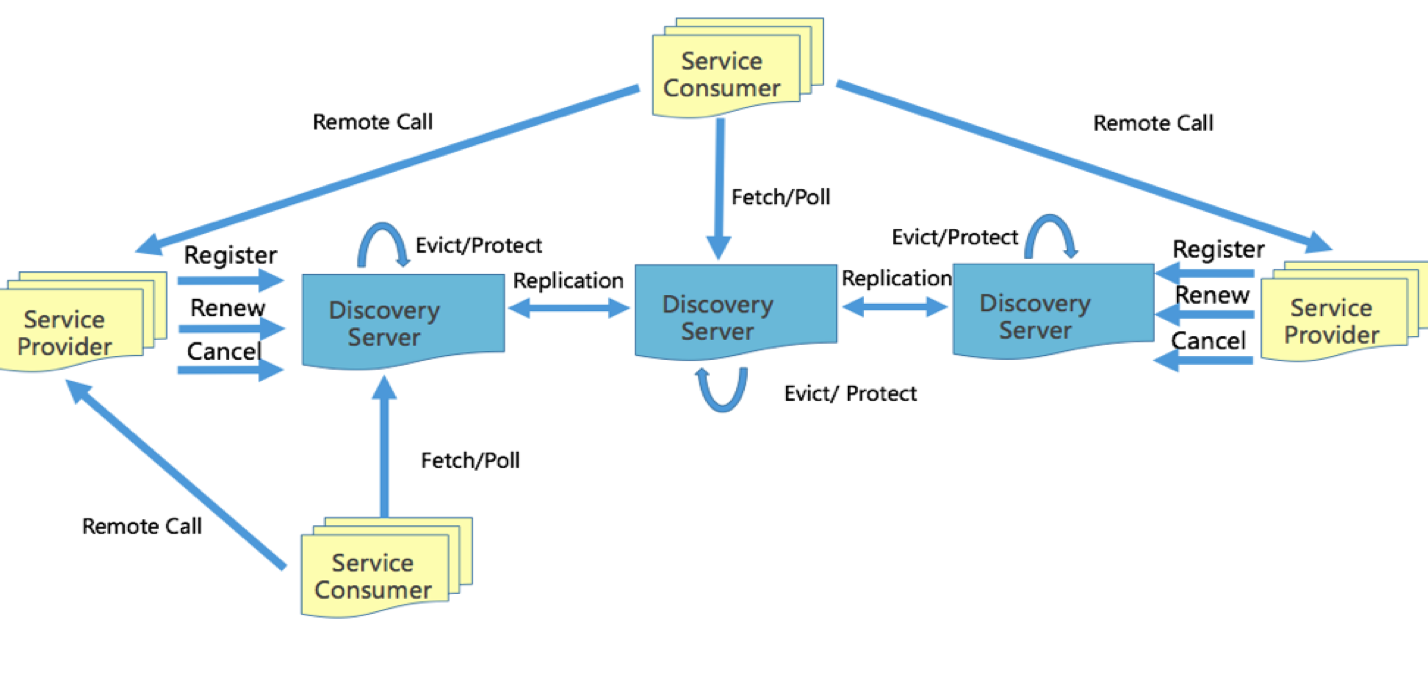

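Architecture

1. Instances are located by AppID (service name) and hostname.

2. Node: a discovery server node.

3. Provider: a service provider, currently hosted on the k8s platform. Once its container starts, it sends a register request to a discovery server and then sends a heartbeat every 30s.

4. Consumer: fetches the node list at startup, then picks a node at random and long-polls it (about once every 30s) for the service's instance list.

5. Instance: the container information for an AppID kept in a node's memory, including hostname, IP, service, and so on.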
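The consumer behaviour in item 4 can be sketched as a loop. PollResult, Poller, and watch are hypothetical names, and the fake poller in main replaces real HTTP calls; what the sketch shows is the shape of the loop: one randomly chosen node, repeated long polls, and a last-seen timestamp that only advances when something actually changed.

```go
package main

import (
	"fmt"
	"math/rand"
)

// PollResult is a hypothetical long-poll response.
type PollResult struct {
	Instances   []string
	LatestTs    int64 // server-side time of the latest change
	NotModified bool  // nothing changed while the request was held
}

// Poller stands in for one long-polling request to a discovery node.
type Poller func(node, appID string, lastTs int64) (PollResult, error)

// watch sketches a consumer: pick a random node from the list fetched at
// startup, then keep long-polling it, sending the timestamp of the last
// change seen. It runs the given number of rounds and returns every update.
func watch(nodes []string, appID string, poll Poller, rounds int) [][]string {
	var updates [][]string
	var lastTs int64
	node := nodes[rand.Intn(len(nodes))]
	for i := 0; i < rounds; i++ {
		res, err := poll(node, appID, lastTs)
		if err != nil {
			node = nodes[rand.Intn(len(nodes))] // try another node next round
			continue
		}
		if res.NotModified {
			continue // the window expired without changes; poll again
		}
		lastTs = res.LatestTs
		updates = append(updates, res.Instances)
	}
	return updates
}

func main() {
	nodes := []string{"node-a", "node-b"}
	poll := func(node, appID string, lastTs int64) (PollResult, error) {
		if lastTs == 0 {
			return PollResult{Instances: []string{"10.0.0.1:9000"}, LatestTs: 1}, nil
		}
		return PollResult{NotModified: true}, nil
	}
	fmt.Println(watch(nodes, "demo.service", poll, 3)) // [[10.0.0.1:9000]]
}
```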

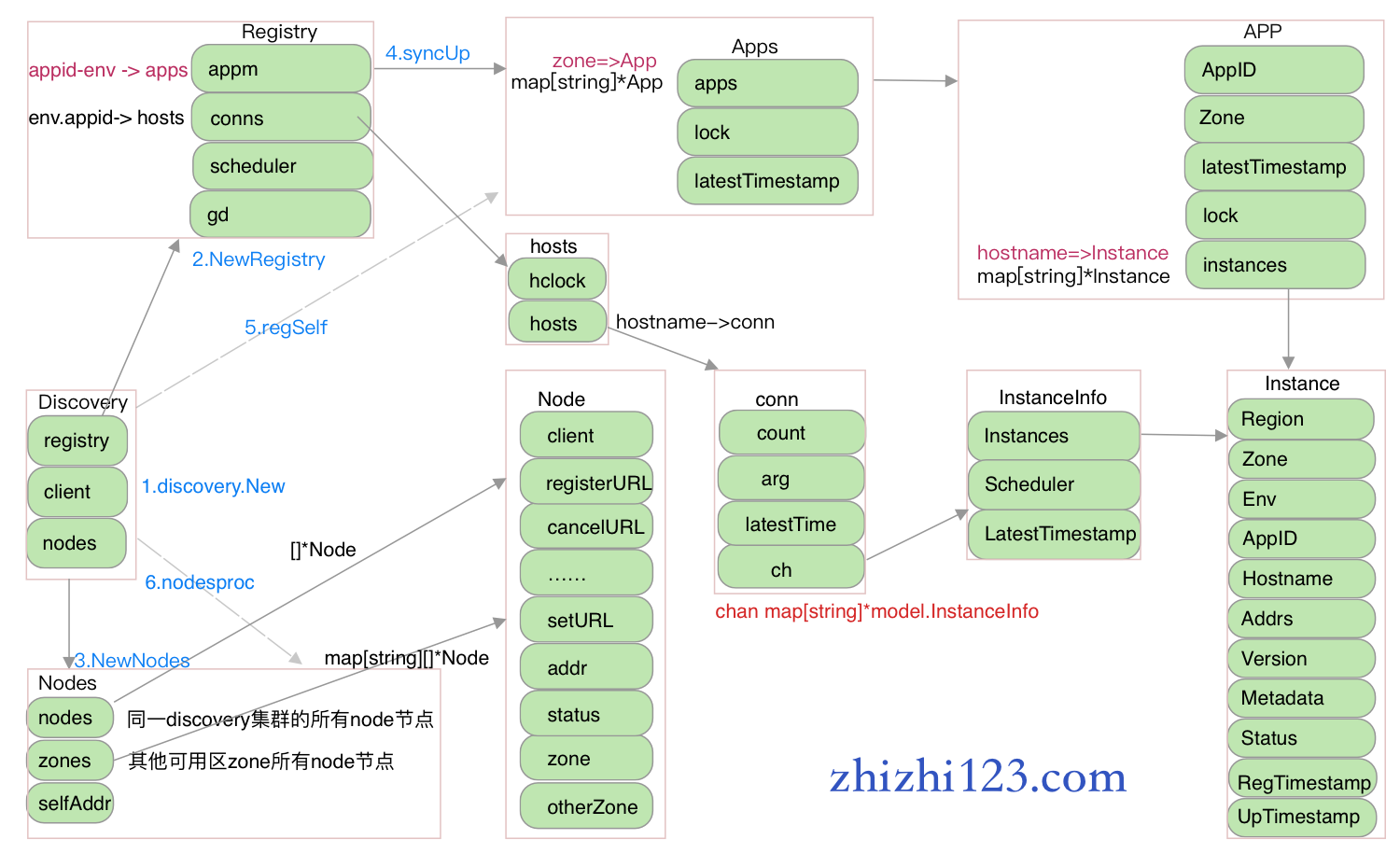

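Storage Structure

The figure above shows discovery's main storage structures and numbers the main steps after the service starts; they are explained one by one below.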

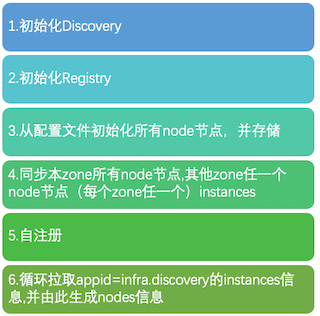

Initialization

Steps 1 and 2 initialize the corresponding in-memory structures and are not covered in detail here; we start from step 3:

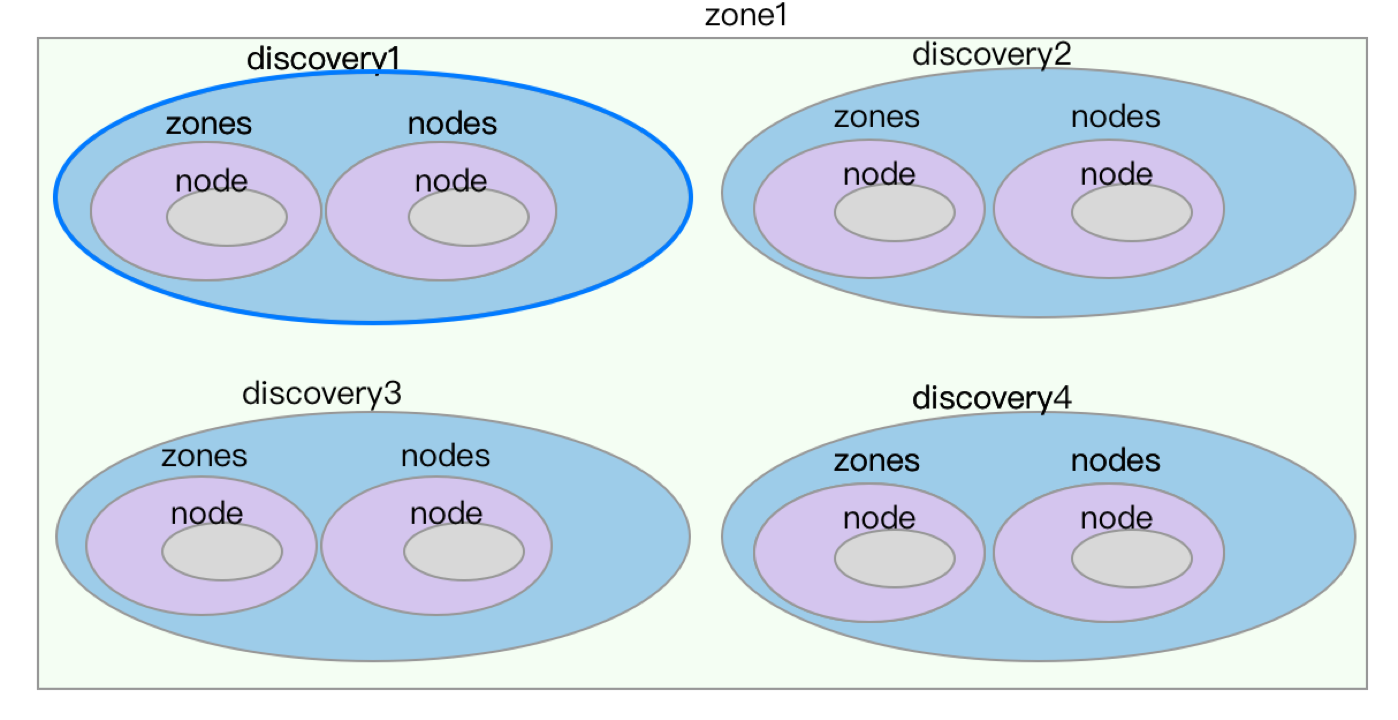

1. Nodes

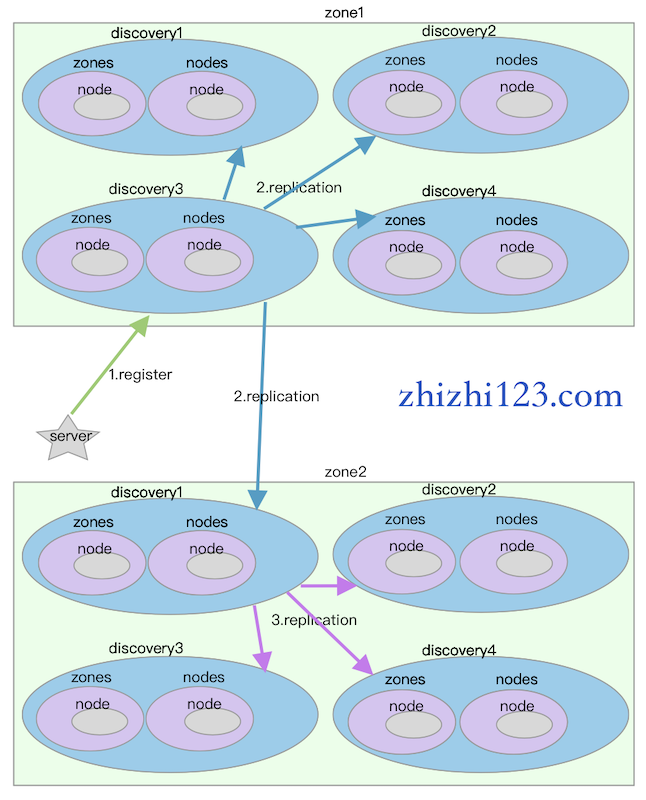

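Each node represents one discovery server instance, and every node stores information about all nodes of the service. An example is shown in the figure.

The node initialization code is as follows:

func NewNodes(c *conf.Config) *Nodes {

File: registry/nodes.go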
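A rough sketch of the node view described above. peerView and newPeerView are hypothetical, and a plain zone -> addresses map stands in for conf.Config. The idea shown is that a server keeps its same-zone peers separate from the nodes of other zones, which is what cross-zone replication relies on later; leaving the server's own address out of its peer list is a choice of this sketch.

```go
package main

import "fmt"

// Node is a hypothetical view of one discovery server as seen by a peer.
type Node struct {
	Addr string
	Zone string
}

// peerView is the peer list a discovery server keeps: peers in its own zone
// and, separately, the nodes of every other zone.
type peerView struct {
	self      string
	zone      string
	local     []*Node            // same-zone peers (self excluded)
	zoneNodes map[string][]*Node // other zone -> its nodes
}

// newPeerView builds the view for the server at self in zone from a
// hypothetical zone -> addresses configuration.
func newPeerView(self, zone string, cfg map[string][]string) *peerView {
	v := &peerView{self: self, zone: zone, zoneNodes: make(map[string][]*Node)}
	for z, addrs := range cfg {
		for _, addr := range addrs {
			n := &Node{Addr: addr, Zone: z}
			switch {
			case z == zone && addr == self:
				// our own address: nothing to replicate to
			case z == zone:
				v.local = append(v.local, n)
			default:
				v.zoneNodes[z] = append(v.zoneNodes[z], n)
			}
		}
	}
	return v
}

func main() {
	v := newPeerView("10.0.0.1:7171", "sh001", map[string][]string{
		"sh001": {"10.0.0.1:7171", "10.0.0.2:7171"},
		"sh002": {"10.1.0.1:7171"},
	})
	fmt.Println(len(v.local), len(v.zoneNodes["sh002"])) // 1 1
}
```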

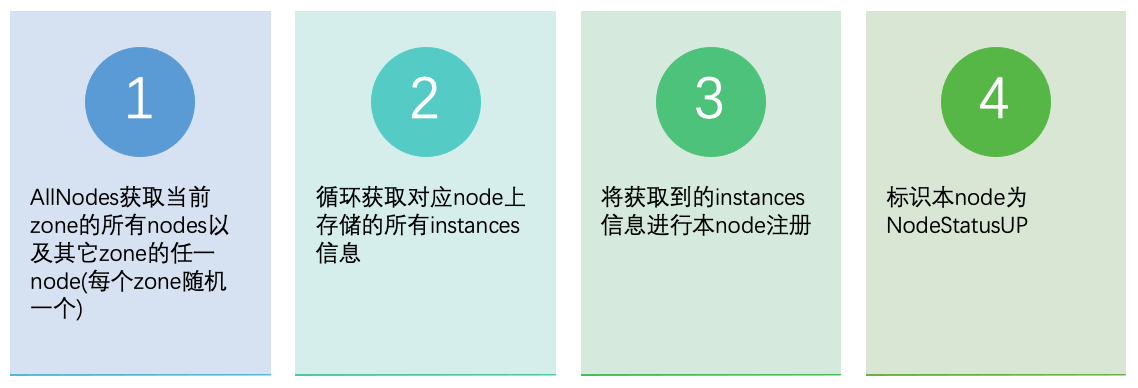

2. syncup

func (d *Discovery) syncUp() {

File: discovery/syncup.go
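Only the signature of syncUp is shown above. As its name suggests, this step brings a freshly started node up to date with its peers before it serves traffic. A sketch of that idea under stated assumptions: Instance, Registry, FetchAll, and syncFromPeers are hypothetical, unreachable peers are skipped, and when two copies of an instance disagree the one with the newer DirtyTimestamp wins.

```go
package main

import "fmt"

// Instance is a hypothetical, trimmed-down instance record.
type Instance struct {
	AppID          string
	Hostname       string
	DirtyTimestamp int64 // last time this record changed; newer wins
}

// FetchAll stands in for a "fetch all instances" call to one peer.
type FetchAll func(peer string) (map[string][]*Instance, error)

// Registry is a minimal local store keyed by AppID, then hostname.
type Registry struct {
	apps map[string]map[string]*Instance
}

func NewRegistry() *Registry { return &Registry{apps: make(map[string]map[string]*Instance)} }

// Register stores ins unless a newer copy of the same instance already exists.
func (r *Registry) Register(ins *Instance) {
	hosts := r.apps[ins.AppID]
	if hosts == nil {
		hosts = make(map[string]*Instance)
		r.apps[ins.AppID] = hosts
	}
	if old, ok := hosts[ins.Hostname]; ok && old.DirtyTimestamp > ins.DirtyTimestamp {
		return
	}
	hosts[ins.Hostname] = ins
}

// syncFromPeers pulls a full snapshot from every peer and merges it locally.
// It does not stop at the first success, so instances known to only some
// peers are not missed. It returns how many peers answered; the caller
// would then mark this node as up.
func syncFromPeers(r *Registry, peers []string, fetch FetchAll) int {
	ok := 0
	for _, p := range peers {
		data, err := fetch(p)
		if err != nil {
			continue // an unreachable peer is skipped, not fatal
		}
		ok++
		for _, list := range data {
			for _, ins := range list {
				r.Register(ins)
			}
		}
	}
	return ok
}

func main() {
	snapshots := map[string]map[string][]*Instance{
		"peer-1": {"demo": {{AppID: "demo", Hostname: "h1", DirtyTimestamp: 1}}},
		"peer-2": {"demo": {{AppID: "demo", Hostname: "h1", DirtyTimestamp: 5}, {AppID: "demo", Hostname: "h2", DirtyTimestamp: 2}}},
	}
	fetch := func(peer string) (map[string][]*Instance, error) {
		if s, ok := snapshots[peer]; ok {
			return s, nil
		}
		return nil, fmt.Errorf("peer %s unreachable", peer)
	}
	r := NewRegistry()
	n := syncFromPeers(r, []string{"peer-1", "peer-2", "peer-3"}, fetch)
	fmt.Println(n, len(r.apps["demo"]), r.apps["demo"]["h1"].DirtyTimestamp) // 2 2 5
}
```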

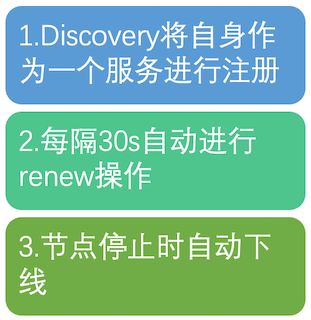

3. Self-Registration

func (d *Discovery) regSelf() context.CancelFunc {

File: discovery/syncup.go
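Only the signature of regSelf survives in the article. Its context.CancelFunc return type suggests a common Go pattern: register once, renew in a background goroutine, and hand back a function that stops the loop. A sketch of that pattern, assuming a hypothetical selfRegistrar interface; registerSelf is not discovery's code, and the renew interval (30s in the architecture section) is a parameter so the demo runs quickly.

```go
package main

import (
	"context"
	"fmt"
	"sync/atomic"
	"time"
)

// selfRegistrar is a hypothetical interface over the local registry.
type selfRegistrar interface {
	Register(appID, hostname string)
	Renew(appID, hostname string)
	Cancel(appID, hostname string)
}

// registerSelf registers this server under appID, renews it every interval
// in the background, and returns a CancelFunc that stops renewing and
// deregisters the server before returning.
func registerSelf(r selfRegistrar, appID, hostname string, interval time.Duration) context.CancelFunc {
	ctx, cancel := context.WithCancel(context.Background())
	r.Register(appID, hostname)
	done := make(chan struct{})
	go func() {
		defer close(done)
		ticker := time.NewTicker(interval)
		defer ticker.Stop()
		for {
			select {
			case <-ticker.C:
				r.Renew(appID, hostname)
			case <-ctx.Done():
				r.Cancel(appID, hostname)
				return
			}
		}
	}()
	return func() {
		cancel()
		<-done // wait until the goroutine has deregistered
	}
}

// countingRegistrar is a test double that only counts calls.
type countingRegistrar struct{ registered, renewed, cancelled int32 }

func (c *countingRegistrar) Register(string, string) { atomic.AddInt32(&c.registered, 1) }
func (c *countingRegistrar) Renew(string, string)    { atomic.AddInt32(&c.renewed, 1) }
func (c *countingRegistrar) Cancel(string, string)   { atomic.AddInt32(&c.cancelled, 1) }

// counts returns the three counters safely.
func (c *countingRegistrar) counts() (int32, int32, int32) {
	return atomic.LoadInt32(&c.registered), atomic.LoadInt32(&c.renewed), atomic.LoadInt32(&c.cancelled)
}

func main() {
	c := &countingRegistrar{}
	stop := registerSelf(c, "infra.discovery", "host-1", 10*time.Millisecond)
	time.Sleep(35 * time.Millisecond)
	stop()
	reg, renewed, cancelled := c.counts()
	fmt.Println(reg, cancelled) // 1 1
	fmt.Println("renews so far:", renewed)
}
```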

4. Maintaining the Node List

Repeatedly fetch the instances of appid=infra.discovery and build the node information from them.

func (d *Discovery) nodesproc() {

File: discovery/syncup.go
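Step 4 turns the instances registered under appid=infra.discovery into the node list. A sketch of just that conversion, with a hypothetical DiscoveryInstance type; keeping only http:// addresses as node addresses is an assumption of this sketch. In a nodesproc-style loop, buildNodes would run again each time a poll returns a changed list.

```go
package main

import (
	"fmt"
	"net/url"
	"sort"
)

// DiscoveryInstance is a hypothetical, trimmed-down infra.discovery instance.
type DiscoveryInstance struct {
	Zone  string
	Addrs []string // e.g. "http://10.0.0.1:7171"
}

// buildNodes maps each zone to the sorted host:port list of its discovery
// servers. Only http:// addresses are kept (an assumption of this sketch).
func buildNodes(ins []DiscoveryInstance) map[string][]string {
	zones := make(map[string][]string)
	for _, in := range ins {
		for _, a := range in.Addrs {
			u, err := url.Parse(a)
			if err != nil || u.Scheme != "http" {
				continue
			}
			zones[in.Zone] = append(zones[in.Zone], u.Host)
		}
	}
	for _, hosts := range zones {
		sort.Strings(hosts) // sorts in place; the map still refers to the same slice
	}
	return zones
}

func main() {
	ins := []DiscoveryInstance{
		{Zone: "sh001", Addrs: []string{"http://10.0.0.2:7171", "grpc://10.0.0.2:9000"}},
		{Zone: "sh001", Addrs: []string{"http://10.0.0.1:7171"}},
		{Zone: "sh002", Addrs: []string{"http://10.1.0.1:7171"}},
	}
	fmt.Println(buildNodes(ins)) // map[sh001:[10.0.0.1:7171 10.0.0.2:7171] sh002:[10.1.0.1:7171]]
}
```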

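Main Operations

1. Register, Heartbeat, and Deregister

Registration, update, and deregistration follow a similar process: the operation is first handled on the local node (process 1 in the figure), then replicated to every node in the local zone and to one node in each other zone (process 2), and finally to the remaining nodes of the other zones (process 3).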
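The three-stage fan-out can be made concrete by computing who receives an operation at each stage. This is a hypothetical helper, not discovery's Replicate: zones is an assumed zone -> addresses map, and the entry node of each remote zone is simply the first one listed, whereas the text only says any one node will do.

```go
package main

import (
	"fmt"
	"sort"
)

// replicationTargets sketches the fan-out for an operation first handled by
// self (process 1):
//   process 2: all other nodes in self's zone, plus one entry node per other zone;
//   process 3: each entry node forwards to the remaining nodes of its zone.
// The entry node is the first one listed (a simplification).
func replicationTargets(self, selfZone string, zones map[string][]string) (process2 []string, process3 map[string][]string) {
	process3 = make(map[string][]string)
	for _, n := range zones[selfZone] {
		if n != self {
			process2 = append(process2, n)
		}
	}
	names := make([]string, 0, len(zones))
	for z := range zones {
		names = append(names, z)
	}
	sort.Strings(names) // deterministic order for the example
	for _, z := range names {
		nodes := zones[z]
		if z == selfZone || len(nodes) == 0 {
			continue
		}
		entry := nodes[0]
		process2 = append(process2, entry)
		process3[entry] = append([]string(nil), nodes[1:]...)
	}
	return process2, process3
}

func main() {
	p2, p3 := replicationTargets("a1", "sh001", map[string][]string{
		"sh001": {"a1", "a2", "a3"},
		"sh002": {"b1", "b2"},
	})
	fmt.Println(p2, p3) // [a2 a3 b1] map[b1:[b2]]
}
```

Sending to a single entry node per remote zone keeps cross-zone traffic to one request per zone; the entry node then fans out inside its own zone.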

The main code is as follows (using registration as the example).

Route definitions (including the routes for fetching instances):

func innerRouter(e *bm.Engine) {

File: http/http.go

HTTP registration handler:

func register(c *bm.Context) {

File: http/discovery.go

Performing the registration:

// Register a new instance.

File: discovery/register.go

Registration on the local node (process 1):

// Register a new instance.

File: registry/registry.go

Replicating to other nodes:

// Replicate replicate information to all nodes except for this node.

The action method:

func (ns *Nodes) action(c context.Context, eg *errgroup.Group, action model.Action, n *Node, i *model.Instance) {

File: registry/nodes.go

Registration on another node:

// Register send the registration information of Instance receiving by this node to the peer node represented.

The call method:

func (n *Node) call(c context.Context, action model.Action, i *model.Instance, uri string, data interface{}) (err error) {

File: registry/node.go

Pay close attention to two key parameters in the code above: they are what make the operation propagate correctly to the other nodes.

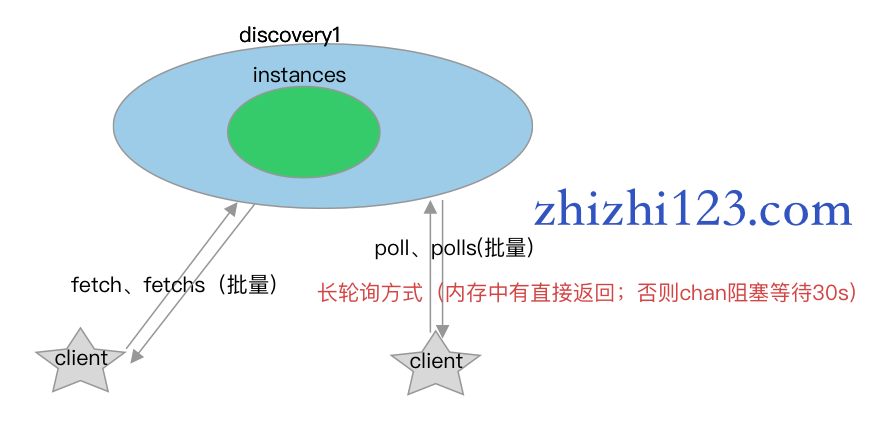

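2. Fetching Instances

1. The in-memory structure Registry.conns->hosts->conn holds the connections that are blocked waiting for instances.

2. Register, deregister, update, and similar operations call broadcast, which iterates over all conns and sends the instances to the corresponding channel.

The following uses polls as the example.

The polls handler: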
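The conns/broadcast mechanism can be sketched with one buffered channel per waiting request. hub, poll, broadcast, and waiting are hypothetical names and instances are plain strings; a poll either receives a pushed list or times out with ErrNotModified. A real implementation would also answer immediately when the caller's last-seen timestamp is already stale; this sketch leaves that check out.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// ErrNotModified is returned when nothing changed within the poll window.
var ErrNotModified = errors.New("not modified")

// hub keeps, per AppID, one channel for each request parked in poll.
type hub struct {
	mu    sync.Mutex
	conns map[string]map[chan []string]struct{} // appID -> waiting connections
}

func newHub() *hub { return &hub{conns: make(map[string]map[chan []string]struct{})} }

// poll blocks until broadcast delivers a new list for appID or the timeout expires.
func (h *hub) poll(appID string, timeout time.Duration) ([]string, error) {
	ch := make(chan []string, 1) // buffered so broadcast never blocks on a slow reader
	h.mu.Lock()
	if h.conns[appID] == nil {
		h.conns[appID] = make(map[chan []string]struct{})
	}
	h.conns[appID][ch] = struct{}{}
	h.mu.Unlock()

	defer func() { // deregister this connection however we leave
		h.mu.Lock()
		delete(h.conns[appID], ch)
		h.mu.Unlock()
	}()

	select {
	case ins := <-ch:
		return ins, nil
	case <-time.After(timeout):
		return nil, ErrNotModified
	}
}

// broadcast runs after register/cancel/update changes appID: it pushes the
// new list to every waiting connection and clears them.
func (h *hub) broadcast(appID string, ins []string) {
	h.mu.Lock()
	defer h.mu.Unlock()
	for ch := range h.conns[appID] {
		select {
		case ch <- ins:
		default: // already holds an update; skip
		}
	}
	delete(h.conns, appID)
}

// waiting reports how many polls are currently parked on appID.
func (h *hub) waiting(appID string) int {
	h.mu.Lock()
	defer h.mu.Unlock()
	return len(h.conns[appID])
}

func main() {
	h := newHub()
	fmt.Println(h.poll("demo.service", 10*time.Millisecond)) // [] not modified
	done := make(chan struct{})
	go func() {
		fmt.Println(h.poll("demo.service", 30*time.Second)) // [10.0.0.1:9000] <nil>
		close(done)
	}()
	for h.waiting("demo.service") == 0 {
		time.Sleep(time.Millisecond)
	}
	h.broadcast("demo.service", []string{"10.0.0.1:9000"})
	<-done
}
```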

func polls(c *bm.Context) {

File: http/discovery.go

dis.Polls:

// Polls hangs request and then write instances when that has changes, or return NotModified.

File: discovery/register.go

registry.Polls:

// Polls hangs request and then write instances when that has changes, or return NotModified.

The broadcast method:

// broadcast on poll by chan.

File: registry/registry.go

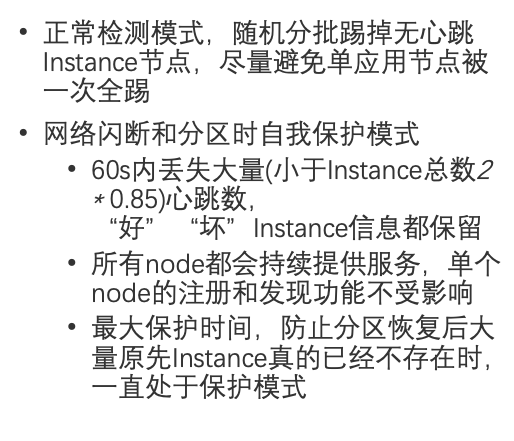

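3. Eviction and Protection Against Transient Disconnects

1. Normally, each service instance renews twice per minute.

2. The maximum protection time is one hour.

Code that evicts expired instances:

func (r *Registry) evict() {

File: registry/registry.go

Per-minute renew counting and resetting, as well as transient-disconnect protection, are implemented in registry/guard.go.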
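A minimal sketch of how the two rules above can combine into an eviction decision. Only the 30s renew interval and the one-hour ceiling come from the text; the 90s expiry and the 85% threshold are assumptions borrowed from Eureka-style defaults, and guard, rotate, and shouldEvict are hypothetical names rather than the contents of registry/guard.go.

```go
package main

import (
	"fmt"
	"time"
)

// Parameters for this sketch; only the ceiling and the renew rate come from the text.
const (
	evictAfter       = 90 * time.Second // assumed: no renew for this long => expired
	evictCeiling     = time.Hour        // maximum protection time (from the text)
	renewsPerMinute  = 2                // one renew every 30s (from the text)
	percentThreshold = 0.85             // assumed: below 85% of expected renews => protect
)

// guard counts renews per minute and compares them with the expected number.
type guard struct {
	instances  int // currently registered instances
	current    int // renews counted so far in this minute
	lastMinute int // renews counted in the previous full minute
}

func (g *guard) renew() { g.current++ }

// rotate runs once a minute: the running count becomes the reference count
// and the accumulator is reset.
func (g *guard) rotate() { g.lastMinute, g.current = g.current, 0 }

// protecting reports whether self-preservation is on. A sudden drop in renews
// more likely means this node is cut off than that most instances died.
func (g *guard) protecting() bool {
	expected := float64(g.instances * renewsPerMinute)
	return float64(g.lastMinute) < expected*percentThreshold
}

// shouldEvict decides for one instance given the time since its last renew.
func shouldEvict(sinceRenew time.Duration, protect bool) bool {
	if sinceRenew > evictCeiling {
		return true // protection never keeps an instance beyond the ceiling
	}
	return !protect && sinceRenew > evictAfter
}

func main() {
	g := &guard{instances: 100}
	for i := 0; i < 200; i++ {
		g.renew()
	}
	g.rotate()
	fmt.Println(g.protecting()) // false
	for i := 0; i < 40; i++ {
		g.renew()
	}
	g.rotate()
	fmt.Println(g.protecting()) // true
	fmt.Println(shouldEvict(2*time.Minute, false), shouldEvict(2*time.Minute, true), shouldEvict(2*time.Hour, true)) // true false true
}
```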

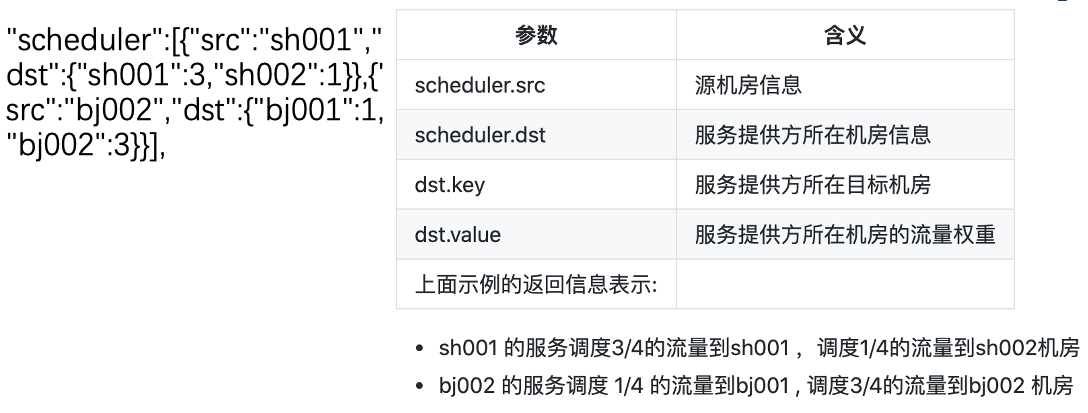

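4. Traffic Scheduling

1. Using the scheduling information, recompute the weight of each instance in the corresponding zones.

2. New instance weight = the zone's scheduler weight × product of the original weights of all zones × original instance weight / original weight of the instance's own zone, where a zone's original weight is the sum of its instances' weights.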
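The reason for this formula is easiest to see with numbers. For each target zone z with scheduler weight s_z and original zone weight W_z (the sum of its instances' weights), each instance weight w becomes s_z × (W_1 × … × W_n) × w / W_z. Summing over a zone gives s_z × (W_1 × … × W_n), so zone totals end up proportional to the scheduler weights while the ratios between instances inside a zone are unchanged. A small check of that arithmetic, using a hypothetical rescale function (not the UseScheduler code):

```go
package main

import "fmt"

// rescale sketches the weight formula for one source zone. sched holds the
// scheduler weight of each target zone; weights holds the original instance
// weights of each target zone (assumed positive). It returns the new weights.
func rescale(sched map[string]int64, weights map[string][]int64) map[string][]int64 {
	sums := make(map[string]int64, len(weights)) // W_z: original zone weight
	product := int64(1)                          // product of all W_z
	for z, ws := range weights {
		var s int64
		for _, w := range ws {
			s += w
		}
		if s == 0 {
			continue // empty zone: leave it out entirely
		}
		sums[z] = s
		product *= s
	}
	out := make(map[string][]int64, len(sums))
	for z, s := range sums {
		for _, w := range weights[z] {
			// sched_z * product * w / W_z; W_z divides product exactly,
			// so dividing first keeps the numbers smaller.
			out[z] = append(out[z], sched[z]*(product/s)*w)
		}
	}
	return out
}

func main() {
	out := rescale(
		map[string]int64{"sh001": 2, "sh002": 1},
		map[string][]int64{"sh001": {10, 10}, "sh002": {10, 30}},
	)
	fmt.Println(out) // map[sh001:[800 800] sh002:[200 600]]
}
```

Here the zone totals are 1600 and 800, a 2:1 split matching the scheduler weights, and sh002 keeps its 1:3 ratio between instances. With many zones the product grows quickly, so an implementation has to watch for integer overflow.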

Weight calculation code:

func (insInf *InstancesInfo) UseScheduler(zone string) (inss []*Instance) {

File: naming/naming.go

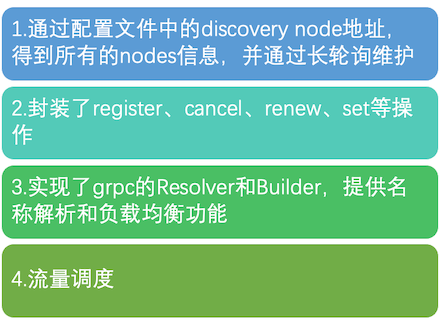

5. Client SDK

The client SDK code is in the naming directory and is not listed here.

For gRPC load balancing and name resolution, see the blog post:

